Python Web Page Scraper

A compilation of page scrapers and data parsers for loading scraped data into a database

Python Collection

- Site A – Happy Faces

- Site B – Flask Sales Generator

- Site C – License Spring API

- [Site D – Scraper](/projects/python-projects/scraper-master/)

Python Repository

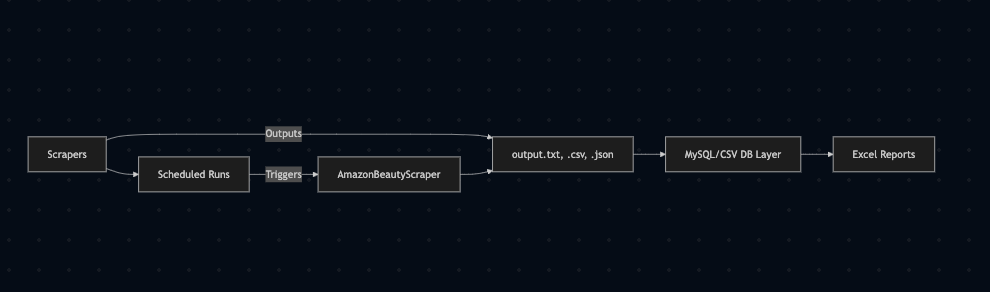

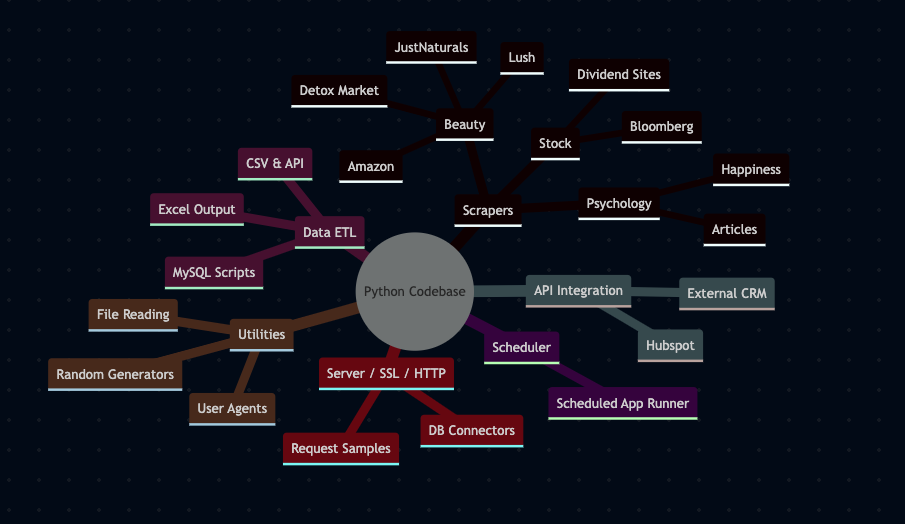

This project documents a repository of useful Python snippets and functions drawn from past working examples, covering everything from scrapers to SSL and databases. The top-level code is organized by topic (scraping, database, and API connection files) and kept for reference purposes: the old-school way of storing our code blocks. Some items are general-purpose scripts that can be pointed at various URLs and parse the result using Beautiful Soup. The scraping exercises help with understanding the basics of spider crawlers, the roadblocks they encounter, and how to handle scraping blocks.
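The scrape-then-store flow described above can be sketched roughly as follows. This is a minimal illustration, not code from the repository: the repository's scripts use Beautiful Soup, while this sketch swaps in the standard library's `html.parser` and `sqlite3` so it runs without extra installs. The names `LinkCollector`, `extract_links`, `save_links`, the `links` table, and the sample HTML are all hypothetical.

```python
import sqlite3
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect (url, text) pairs from every <a href=...> tag in a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []      # finished (url, text) pairs
        self._href = None    # href of the <a> tag currently open, if any
        self._text = []      # text fragments seen inside that tag

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links against the page URL.
                self._href = urljoin(self.base_url, href)
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def extract_links(html, base_url):
    """Parse an HTML string and return its links as (url, text) tuples."""
    parser = LinkCollector(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


def save_links(conn, links):
    """Store links in a hypothetical `links` table, skipping duplicates."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS links (url TEXT PRIMARY KEY, label TEXT)"
    )
    # Parameterized query: scraped text is never interpolated into the SQL.
    conn.executemany(
        "INSERT OR IGNORE INTO links (url, label) VALUES (?, ?)", links
    )
    conn.commit()


# Hypothetical page content standing in for a fetched response body.
SAMPLE_HTML = """
<html><body>
  <a href="/projects/a">Project <b>A</b></a>
  <a href="https://example.com/b">Project B</a>
  <a>No href, skipped</a>
</body></html>
"""

if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    save_links(conn, extract_links(SAMPLE_HTML, "https://example.com"))
    for row in conn.execute("SELECT url, label FROM links ORDER BY url"):
        print(row)
```

Keeping the parsing step separate from the storage step means the same `save_links` helper could sit behind a Beautiful Soup parser instead, and the primary key on `url` lets a scraper be re-run without duplicating rows.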

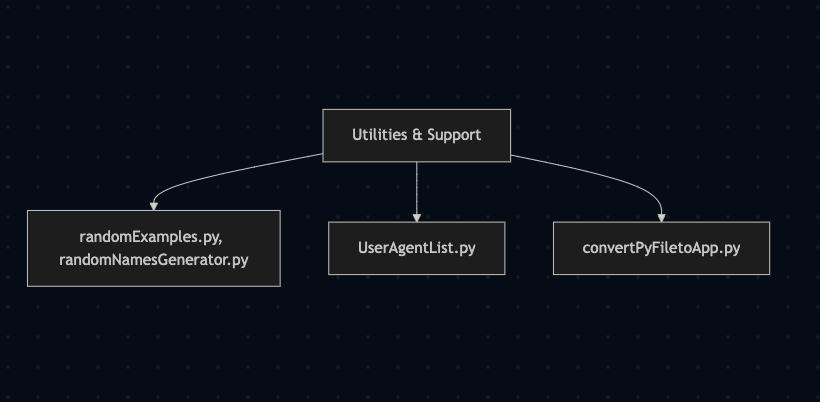

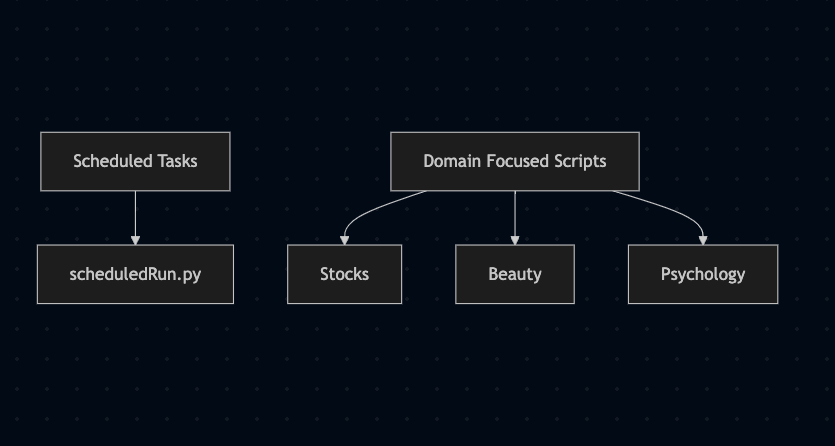

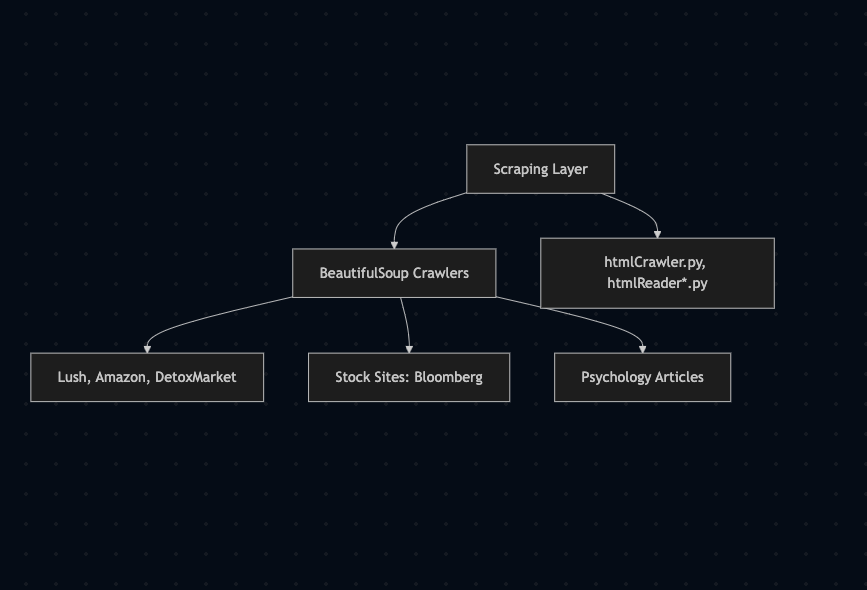

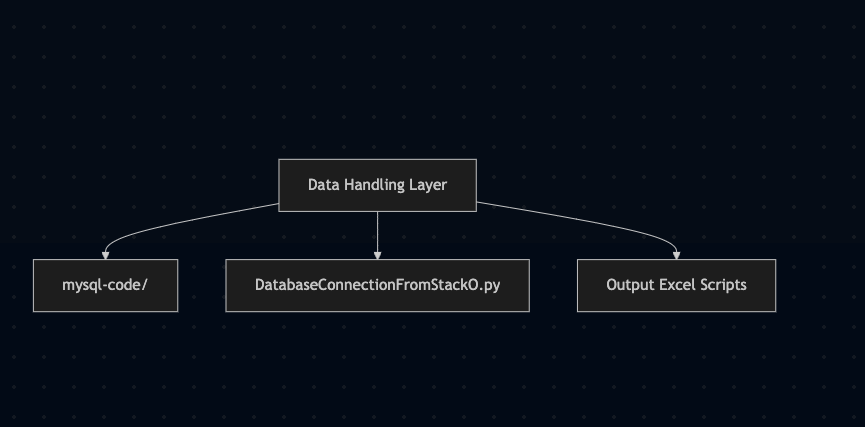

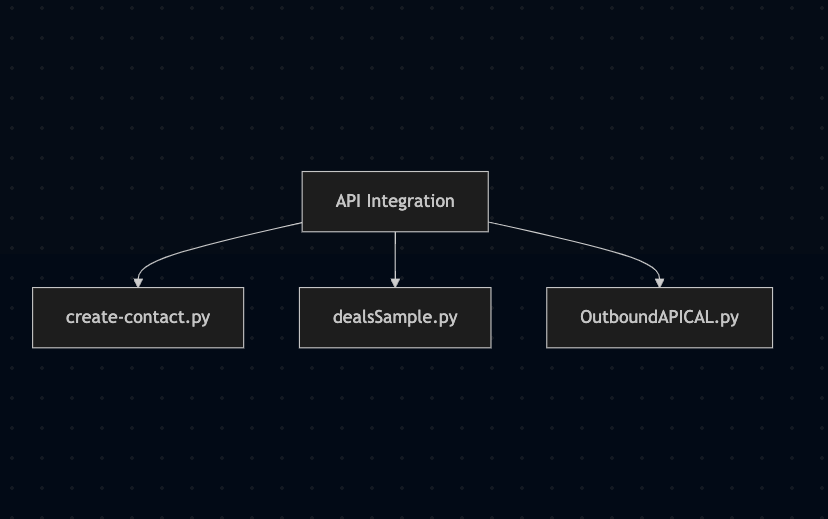

The illustrations on this page show the system-level folder structure and the files residing at each level of the hierarchy. They help visualize the different components of what would otherwise be an overwhelming repository.
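For a text version of the same kind of overview, a folder hierarchy can be printed with a short script. This is only a sketch: `render_tree` is a hypothetical helper, not something taken from the repository, and it assumes every directory under the root is readable.

```python
from pathlib import Path


def render_tree(root, prefix=""):
    """Return the folder hierarchy under `root` as a list of text lines."""
    lines = []
    # List directories before files, each group sorted case-insensitively.
    entries = sorted(
        Path(root).iterdir(), key=lambda p: (p.is_file(), p.name.lower())
    )
    for index, entry in enumerate(entries):
        last = index == len(entries) - 1
        lines.append(f"{prefix}{'└── ' if last else '├── '}{entry.name}")
        if entry.is_dir():
            # Indent children; keep the vertical bar while siblings remain.
            lines.extend(
                render_tree(entry, prefix + ("    " if last else "│   "))
            )
    return lines


if __name__ == "__main__":
    print("\n".join(render_tree(".")))
```

Run from the repository root, it prints one line per file or folder, which is easier to paste into notes than a screenshot.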

The purpose of such a repo is to generate and follow small Python tutorials, and to serve as a go-to reference that speeds up your workflow. Now that AI tools exist, however, it is less necessary to source code this way.