Python HappyFaces

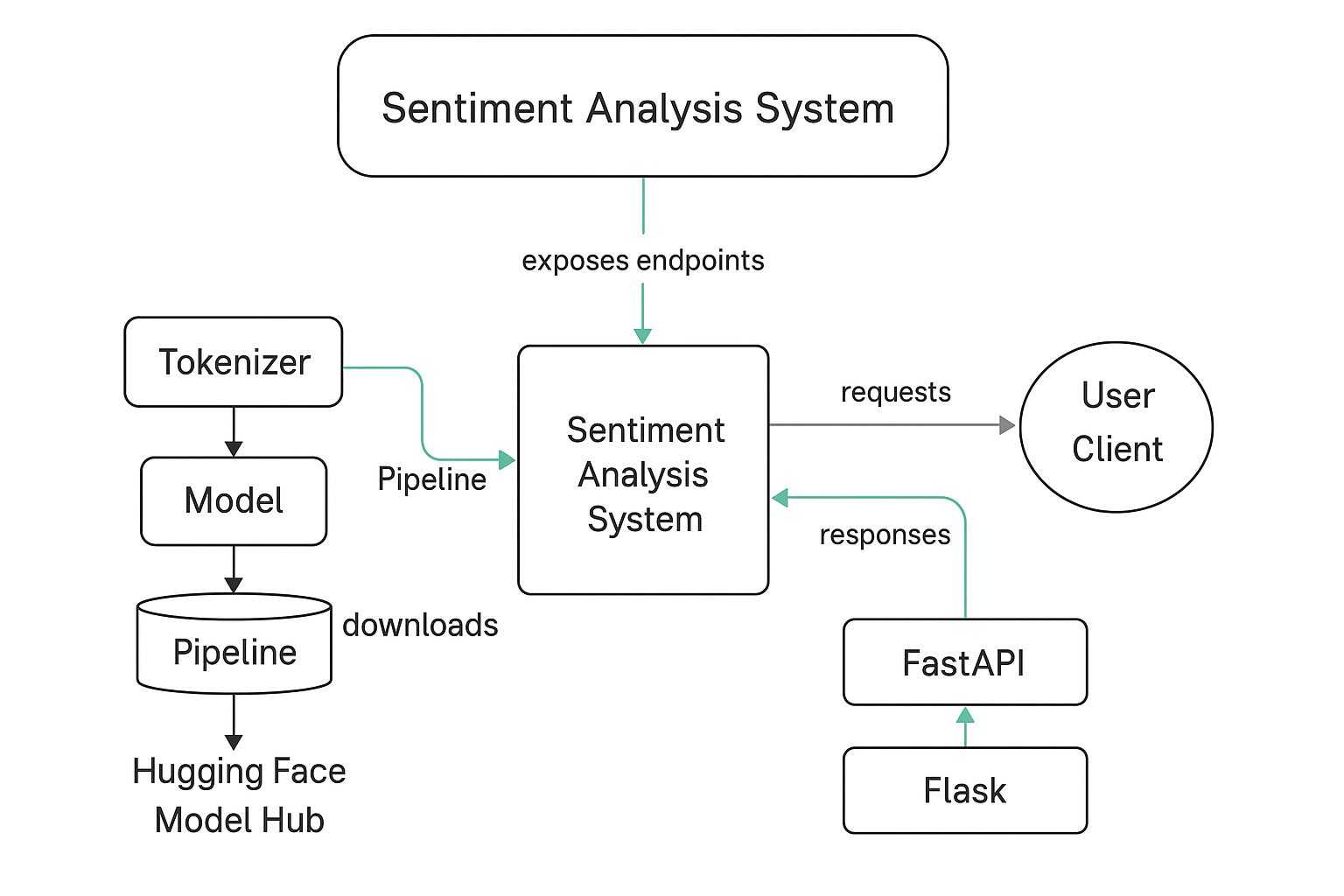

A locally run Flask integration of the pretrained DistilBERT positive/negative sentiment classifier.

Python Collection

- [Site A – Happy Faces](/projects/python-projects/happyfaces/)

- Site B – Flask Sales Generator

- Site C – License Spring API

- Site D – Scraper

Python Repository

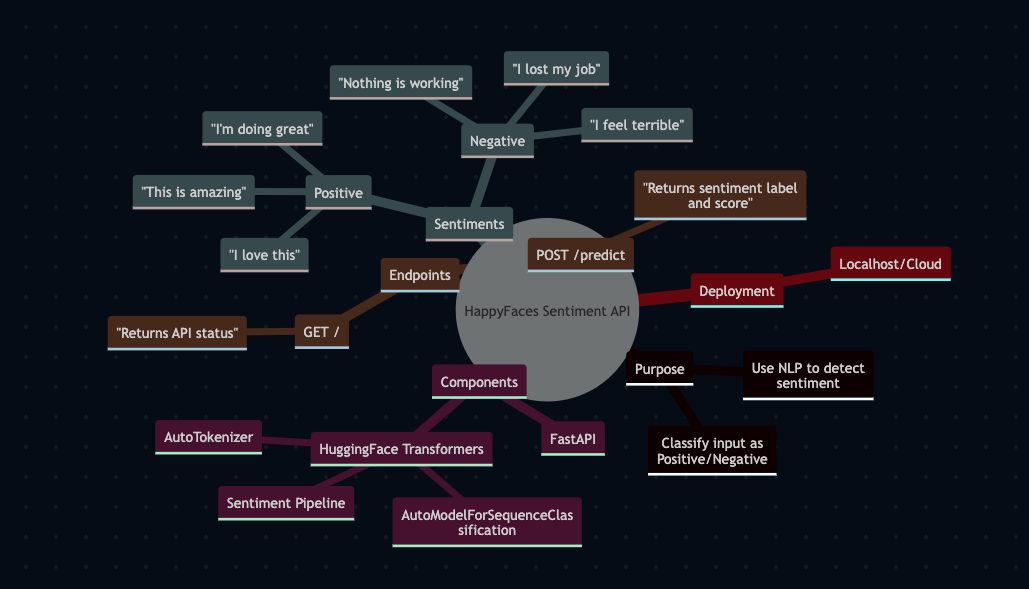

This project explores Python API application development and extends the happy2be API with the DistilBERT sentiment model from the Hugging Face hub, cached locally under huggingface/hub/models--distilbert-base-uncased-finetuned-sst-2-english. The happy2be model does not include a DistilBERT sentiment classifier of its own, so this app provides that feature. It is intended to be deployed on an AWS EC2 instance for access.

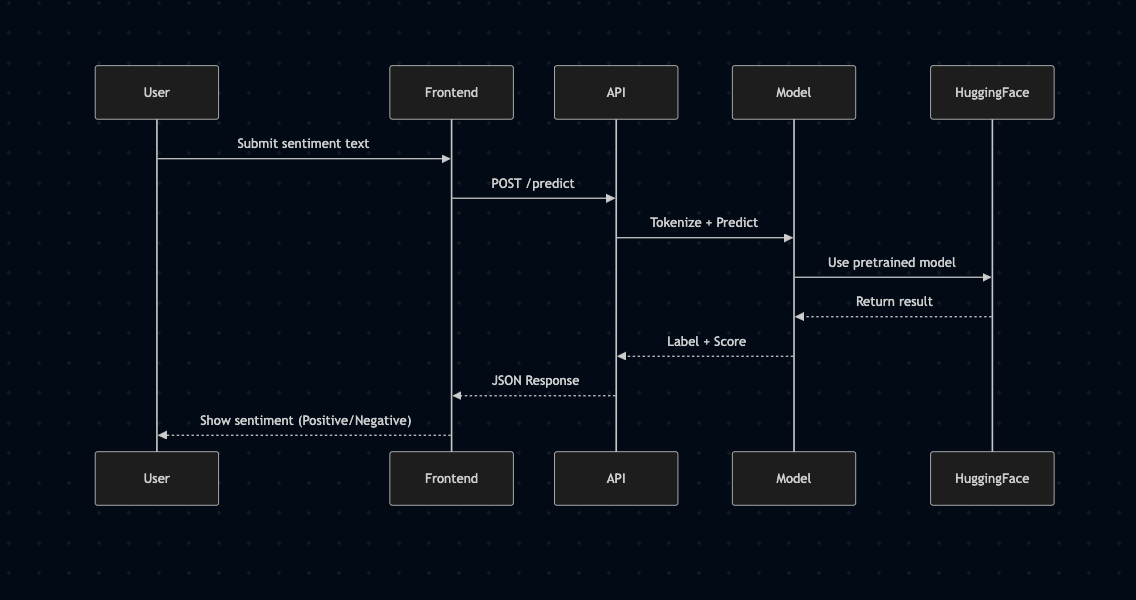

This repo explores two different application packages for building Python APIs. One file uses FastAPI, and the second uses Flask for the app build intended for deployment to AWS. This package is the Python subset of happy2be: happy2be calls it from the back end, it runs the sentiment analysis, and it sends the sentiment back to support happy2be's prompt/sentiment matching.

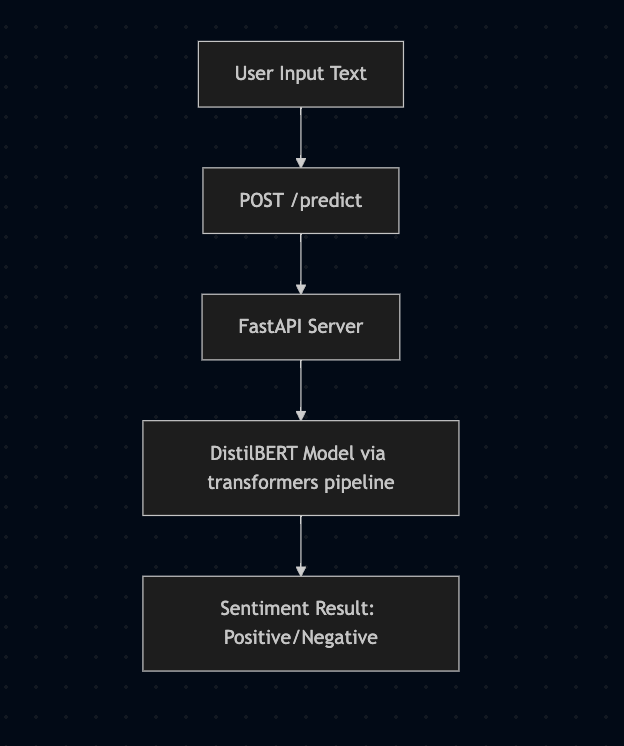

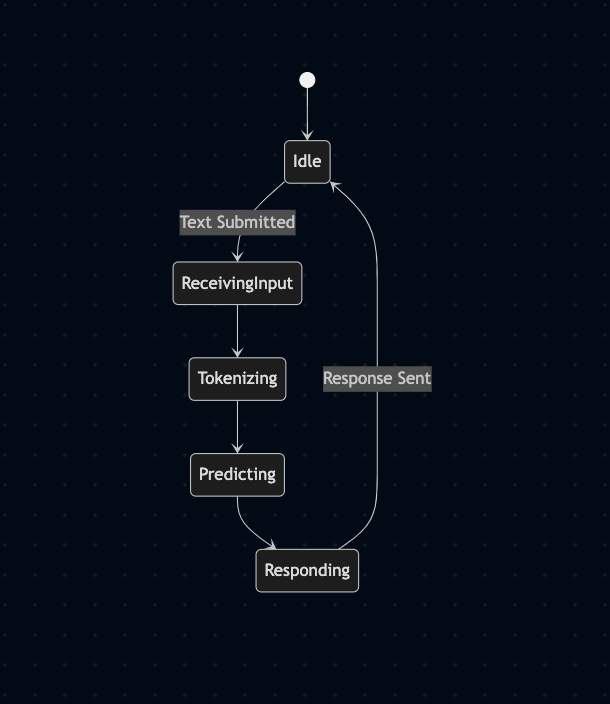

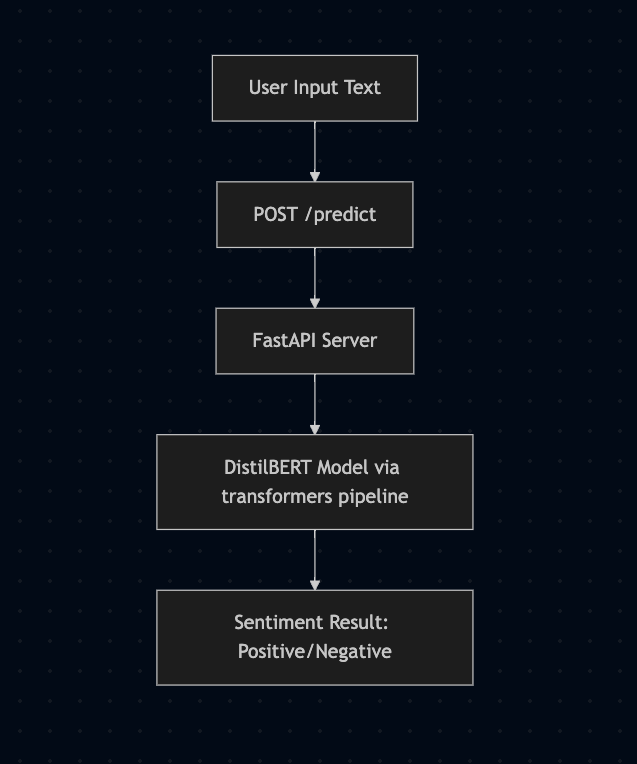

✅ Here’s what this project is doing:

🧠 Function

Loads a sentiment classification model from Hugging Face (distilbert-base-uncased-finetuned-sst-2-english)

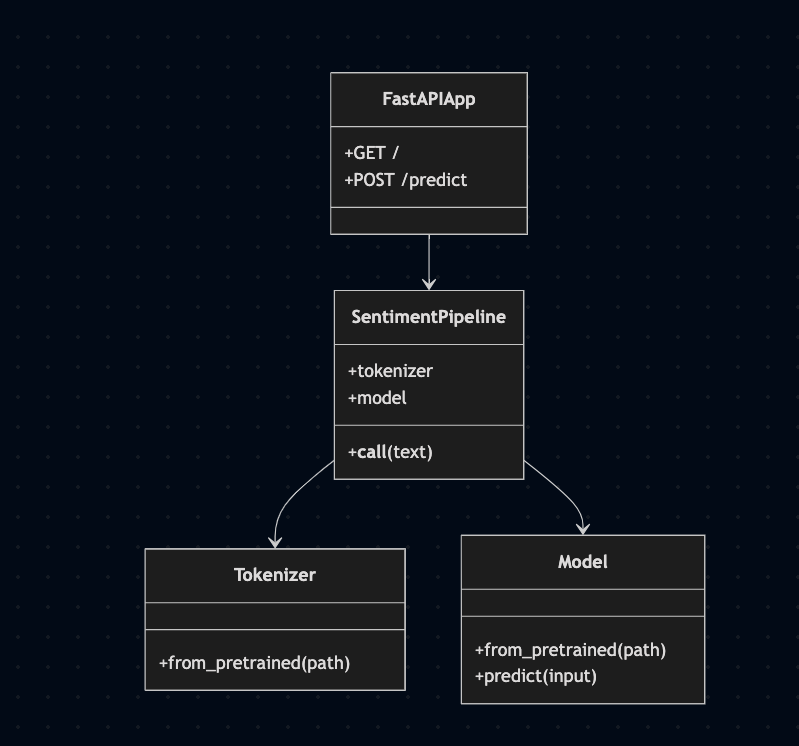

Sets up a FastAPI app with:

- A home route (GET /) for testing

- A predict route (POST /predict/) that accepts a string input and returns the sentiment analysis result

🧪 At runtime

- It runs two test sentences and prints their results when the app starts.

- It runs continuously as a web service using FastAPI (e.g., via uvicorn or hypercorn).
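The behaviour described above could be sketched roughly as follows. This is a minimal illustration, not the repo's actual code: `load_classifier`, `run_startup_checks`, `create_app`, `PredictRequest`, and the JSON body shape `{"text": "..."}` are assumptions. Only the model name and the two routes come from the description. The third-party imports are deferred into the functions so the module can be imported without them installed.

```python
from typing import Callable, Dict, List

MODEL_NAME = "distilbert-base-uncased-finetuned-sst-2-english"

# A classifier maps one string to a list like [{"label": "POSITIVE", "score": 0.99}].
Classifier = Callable[[str], List[Dict[str, object]]]


def load_classifier() -> Classifier:
    """Load the Hugging Face sentiment pipeline (downloads the model on first use)."""
    from transformers import pipeline  # deferred: heavy third-party dependency

    return pipeline("sentiment-analysis", model=MODEL_NAME)


def run_startup_checks(classifier: Classifier, sentences: List[str]) -> List[Dict[str, object]]:
    """Classify a few test sentences, print each result, and return the predictions."""
    results = []
    for sentence in sentences:
        prediction = classifier(sentence)[0]  # one input yields a one-element list
        print(f"{sentence!r} -> {prediction}")
        results.append(prediction)
    return results


def create_app(classifier: Classifier):
    """Build a FastAPI app with GET / and POST /predict/ (request shape is assumed)."""
    from fastapi import FastAPI
    from pydantic import BaseModel

    class PredictRequest(BaseModel):
        text: str

    app = FastAPI()

    @app.get("/")
    def home():
        # Simple liveness response for manual testing.
        return {"status": "ok"}

    @app.post("/predict/")
    def predict(payload: PredictRequest):
        # Returns the pipeline output as JSON.
        return classifier(payload.text)

    return app
```

To serve it, one option is to place `app = create_app(load_classifier())` in a module (say `main.py`, a hypothetical name) and start it with `uvicorn main:app`.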

| File | Purpose |
|---|---|
| WorkingModel.py | Downloads base DistilBERT (no classification head). Good for fine-tuning or using embeddings. |
| convertModel.py | Forces download and logs path for fine-tuned distilbert-base-uncased-finetuned-sst-2-english. Used for ETL or permanent local use. |
| WorkingTokenizer.py | Implements a FastAPI server with / and /predict/ endpoints using HuggingFace pipeline for sentiment. |
| happyface.py | Implements a Flask API for POSTing text to /analyze using the same tokenizer and pipeline. Simple wrapper to serve the model. |
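For the download-and-log step attributed to convertModel.py, something along these lines is one possibility. It is a sketch under assumptions: it relies on the `huggingface_hub` package and its `snapshot_download` function, and `cache_folder_name` and `download_and_log` are hypothetical helper names rather than anything taken from the repo.

```python
import logging

MODEL_ID = "distilbert-base-uncased-finetuned-sst-2-english"


def cache_folder_name(repo_id: str) -> str:
    """Return the folder name the Hugging Face hub cache uses for a model repo."""
    # The cache stores each model under "models--<repo id with '/' replaced by '--'>".
    return "models--" + repo_id.replace("/", "--")


def download_and_log(repo_id: str = MODEL_ID) -> str:
    """Download (or reuse) the model snapshot and log where it lives on disk."""
    from huggingface_hub import snapshot_download  # deferred third-party import

    local_path = snapshot_download(repo_id=repo_id)
    logging.info("Model %s is cached at %s", repo_id, local_path)
    return local_path
```

The logged path can then be copied elsewhere for permanent local use. `cache_folder_name(MODEL_ID)` gives the directory name to look for inside the hub cache.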

The code is deliberately simple: it builds out a small supporting API endpoint that runs sentiment analysis on message prompts.

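A snippet of a Python route included in the app is below:

```python
from flask import Flask, jsonify, request
from transformers import pipeline

app = Flask(__name__)
# Assumed setup (not shown in the original snippet): the SST-2 sentiment pipeline.
nlp = pipeline("sentiment-analysis", model="distilbert-base-uncased-finetuned-sst-2-english")


@app.route("/analyze", methods=["POST"])
def analyze():
    # Tolerate a missing or non-JSON body instead of failing on None.
    data = request.get_json(silent=True) or {}
    text = data.get("text", "")
    if not text:
        return jsonify({"error": "No text provided"}), 400
    result = nlp(text)  # list of {"label": ..., "score": ...} dicts
    return jsonify(result)


if __name__ == "__main__":
    app.run(host="0.0.0.0", port=5001)
```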
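From the calling side, the /analyze route above could be exercised with a small standard-library client like the sketch below. The URL is an assumption (the snippet binds to port 5001; `localhost` is a guess), and `build_analyze_request` and `analyze` are hypothetical names used only for illustration.

```python
import json
import urllib.request

# Assumed address: the service listens on port 5001; the host is a placeholder.
ANALYZE_URL = "http://localhost:5001/analyze"


def build_analyze_request(text: str, url: str = ANALYZE_URL) -> urllib.request.Request:
    """Build a JSON POST request carrying the text to classify."""
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def analyze(text: str, url: str = ANALYZE_URL, timeout: float = 10.0):
    """Send the request and decode the JSON response from the service."""
    request = build_analyze_request(text, url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the service running, `analyze("I love this")` would return the pipeline's list of label/score dictionaries. An empty string triggers the route's 400 response, which `urlopen` raises as `urllib.error.HTTPError`.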

📝 Notes / Suggestions

- ✅ FastAPI vs Flask: You’re testing two server frameworks for the same model. You can unify into one later.

- ✅ Model portability: Good idea with support/convertModel.py to find and optionally move model files.

- ✅ Tokenizer and model loading are correctly structured in both web apps.

- ✅ Ideal next step: Add test cases + Dockerize for portability if deploying to the AWS instance environment.
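As a possible starting point for the suggested test cases, the /analyze logic can be pulled into a plain function and exercised with a stub in place of the model, so tests run without downloading weights. This is only a sketch: `handle_analyze`, `stub_classifier`, and `HandleAnalyzeTests` are hypothetical names, not part of the repo.

```python
import unittest


def handle_analyze(data, classifier):
    """Mirror the /analyze logic: return (payload, HTTP status) for a parsed JSON body."""
    text = (data or {}).get("text", "")
    if not text:
        return {"error": "No text provided"}, 400
    return classifier(text), 200


def stub_classifier(text):
    """Stand-in for the model; always answers POSITIVE."""
    return [{"label": "POSITIVE", "score": 1.0}]


class HandleAnalyzeTests(unittest.TestCase):
    def test_missing_text_is_rejected(self):
        payload, status = handle_analyze({}, stub_classifier)
        self.assertEqual(status, 400)
        self.assertEqual(payload, {"error": "No text provided"})

    def test_missing_body_is_rejected(self):
        self.assertEqual(handle_analyze(None, stub_classifier)[1], 400)

    def test_text_is_classified(self):
        payload, status = handle_analyze({"text": "great"}, stub_classifier)
        self.assertEqual(status, 200)
        self.assertEqual(payload[0]["label"], "POSITIVE")
```

Saved in a test module, these can be run with `python -m unittest`. The Flask or FastAPI route then only needs to parse the request and call `handle_analyze`.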

Visit the python repo for more details: